In the IAS program, we aim to advance research on intraoperative AI-AR assisted surgery by developing a set of novel and efficient algorithms for fundamental yet challenging problems in providing precise and effective intraoperative guidance. Building on these algorithms, together with those developed in the IPD and IVS programs, we will develop three AI-AR IAS systems for representative surgical procedures in liver and kidney cancer treatment.

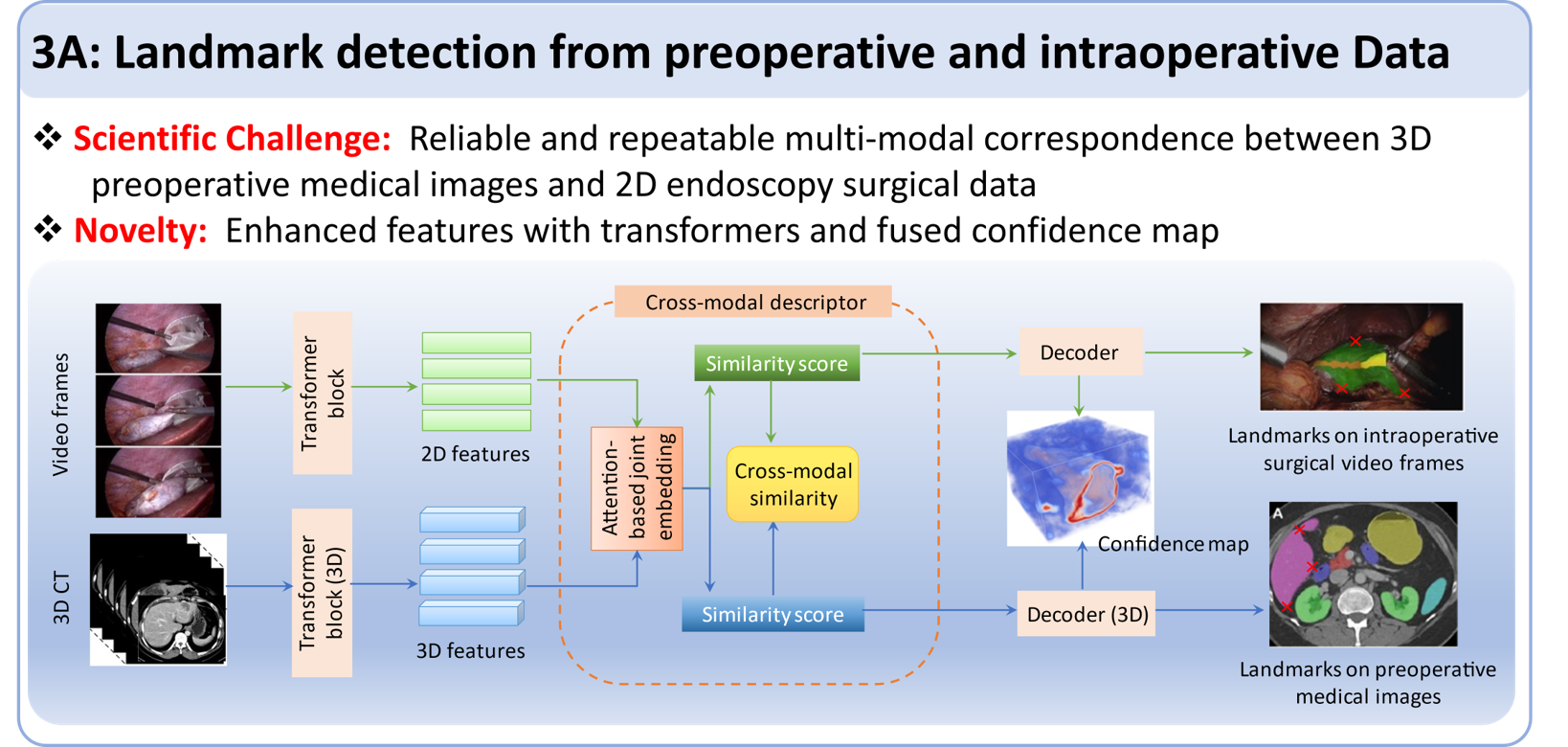

Identifying reliable and repeatable landmarks in both medical images and surgical videos is crucial for precise intraoperative registration. However, conventional landmark detectors and descriptors are designed specifically either for RGB images or for medical images, and no existing technique can effectively establish multi-modal correspondences between 3D medical images and 2D surgical video frames. Building upon our prior expertise in learning 2D-3D correspondences, we propose to automatically learn multi-modal landmark detectors and descriptors that reliably recover corresponding landmarks.

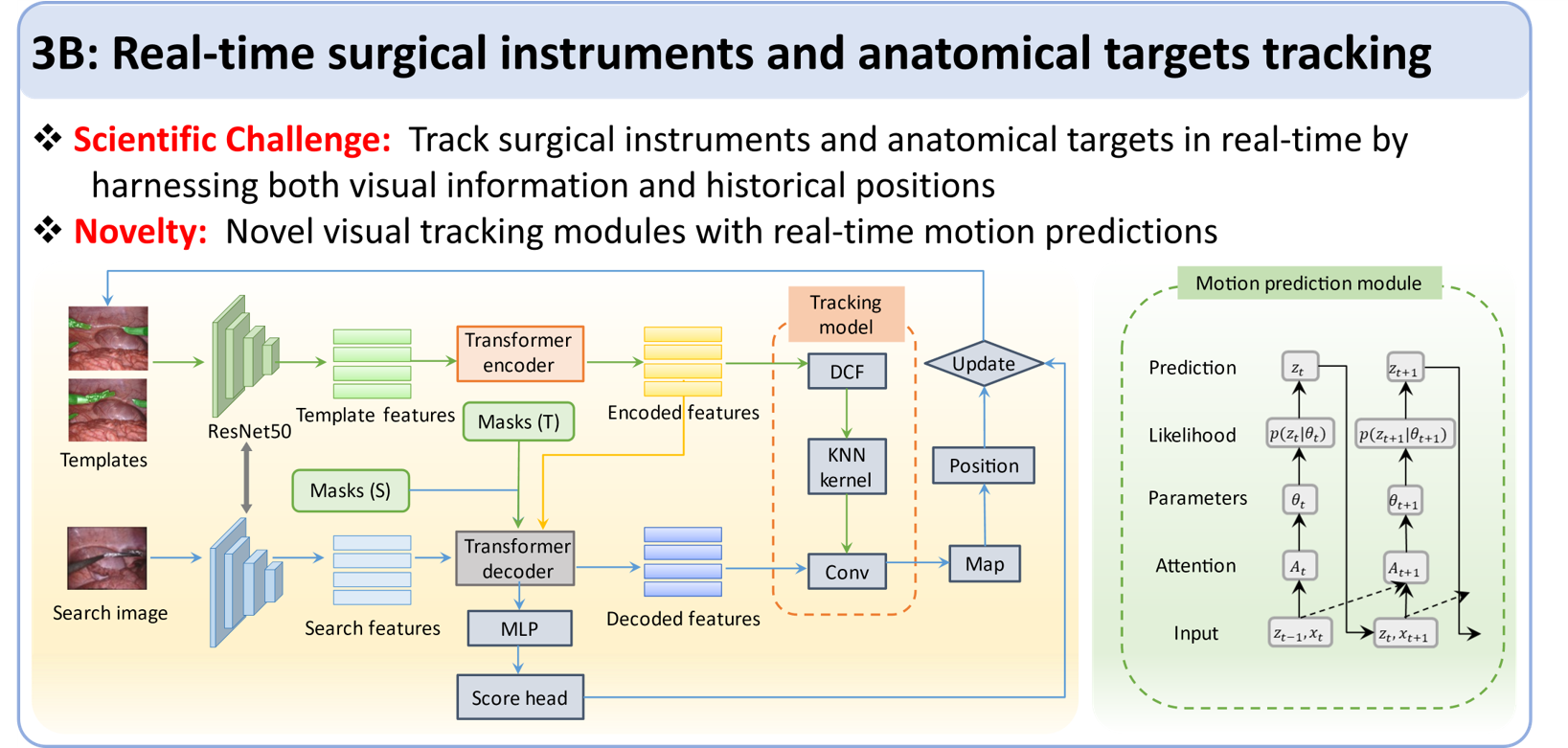
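
Once descriptors are available for landmarks in both modalities, correspondences still have to be selected from them. The following is a minimal sketch of one common selection rule, mutual nearest-neighbour matching on cosine similarity. It is an illustrative assumption, not the matching strategy of the proposed method: the function names, the `min_sim` threshold, and the toy descriptors are all hypothetical, and the learned detectors and descriptors themselves are not modeled.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two descriptor vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def mutual_matches(
    desc_3d: Sequence[Sequence[float]],
    desc_2d: Sequence[Sequence[float]],
    min_sim: float = 0.8,  # hypothetical acceptance threshold
) -> List[Tuple[int, int]]:
    """Return (index_3d, index_2d) pairs that are each other's best match."""
    if not desc_3d or not desc_2d:
        return []
    sim = [[cosine(a, b) for b in desc_2d] for a in desc_3d]
    # Best 2D candidate for every 3D landmark.
    best_2d = [max(range(len(desc_2d)), key=row.__getitem__) for row in sim]
    # Best 3D candidate for every 2D landmark.
    best_3d = [
        max(range(len(desc_3d)), key=lambda i: sim[i][j])
        for j in range(len(desc_2d))
    ]
    # Keep a pair only if the choice is mutual and similar enough.
    return [
        (i, j)
        for i, j in enumerate(best_2d)
        if best_3d[j] == i and sim[i][j] >= min_sim
    ]


if __name__ == "__main__":
    # Toy descriptors standing in for learned ones.
    landmarks_3d = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    landmarks_2d = [[0.0, 2.0], [3.0, 0.0]]
    print(mutual_matches(landmarks_3d, landmarks_2d))
```

The mutual check discards ambiguous landmarks (here the third 3D descriptor, which resembles both 2D descriptors equally), which matters because a few wrong correspondences can dominate a registration estimate.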

Another critical component of the AI-AR assisted surgery system is the accurate intraoperative tracking of surgical instruments and anatomical targets. We will propose a set of novel tracking algorithms guided by ultrasound images; the algorithms are sufficiently general to be extended to other imaging modalities. Because surgical instruments or anatomical targets may change their appearance dramatically, or even disappear completely from the available images during the procedure, trackers that rely on visual information alone cannot track the targets precisely and continuously. We therefore propose to develop a novel hybrid tracking system that consists of a deep learning-based visual tracking module and a motion prediction module.

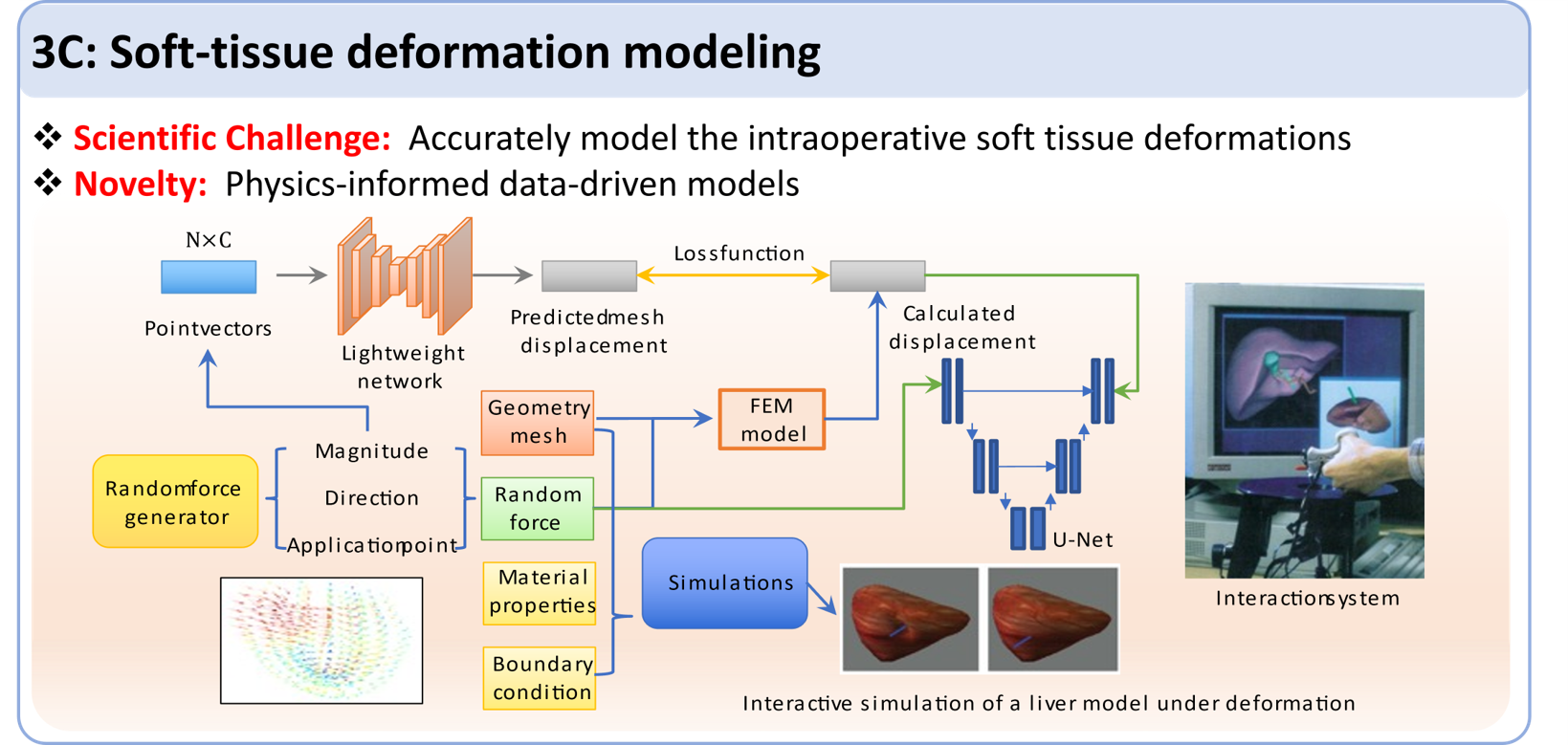
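
To make the division of labour between the two modules concrete, the sketch below shows one simple way a hybrid tracker can behave: it follows the visual measurement while the target is visible and falls back on motion prediction when the target is lost. This is an illustration under stated assumptions, not the proposed system. The visual module is replaced by externally supplied detections with a confidence score, the motion prediction module is replaced by a constant-velocity alpha-beta filter, and the class name, gains, and threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]  # 2D target position in image coordinates


@dataclass
class HybridTracker:
    """Toy hybrid tracker: visual update when visible, motion prediction otherwise."""

    alpha: float = 0.85    # position correction gain (hypothetical value)
    beta: float = 0.5      # velocity correction gain (hypothetical value)
    min_conf: float = 0.5  # detections below this confidence are ignored
    pos: Point = (0.0, 0.0)
    vel: Point = (0.0, 0.0)
    initialized: bool = False

    def step(self, detection: Optional[Point], conf: float = 0.0, dt: float = 1.0) -> Point:
        """Advance one frame and return the estimated target position."""
        visible = detection is not None and conf >= self.min_conf
        if not self.initialized:
            if not visible:
                raise ValueError("the first frame needs a confident detection")
            self.pos, self.initialized = detection, True
            return self.pos
        # Motion prediction: constant-velocity extrapolation.
        pred = (self.pos[0] + self.vel[0] * dt, self.pos[1] + self.vel[1] * dt)
        if not visible:
            # Target lost or unreliable: coast on the prediction.
            self.pos = pred
            return self.pos
        # Visual update: correct position and velocity with the residual.
        rx, ry = detection[0] - pred[0], detection[1] - pred[1]
        self.pos = (pred[0] + self.alpha * rx, pred[1] + self.alpha * ry)
        self.vel = (self.vel[0] + self.beta * rx / dt, self.vel[1] + self.beta * ry / dt)
        return self.pos


if __name__ == "__main__":
    tracker = HybridTracker()
    # None marks frames where the target is not visible in the image.
    frames = [(0.0, 0.0), (2.0, 1.0), (4.0, 2.0), None, None, (10.0, 5.0)]
    for det in frames:
        print(tracker.step(det, conf=0.9 if det is not None else 0.0))
```

A constant-velocity model is only adequate for short gaps; periodic motion such as respiration would call for a richer predictor.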

To facilitate the non-rigid registration of multiple modalities in minimally invasive surgery, the deformation of soft tissues needs to be determined accurately. Finite element methods (FEM) are widely applied for deformation modeling. However, FEM-based approaches cannot achieve a good balance between efficiency and accuracy in complicated surgical procedures. We will propose a lightweight deep learning framework to achieve accurate, real-time soft tissue deformation modeling. It will be composed of two modules: efficient deformation modeling and real-time interaction.

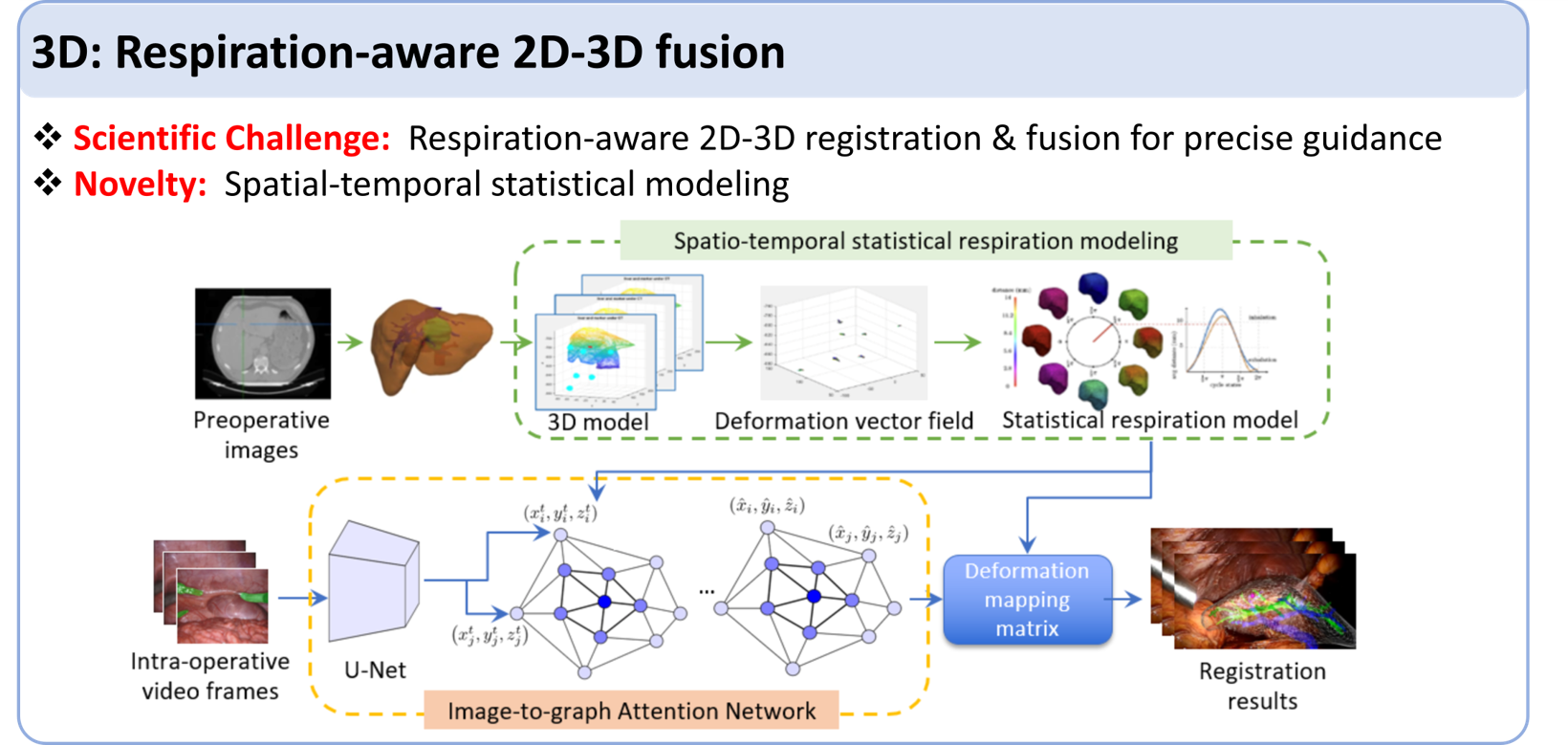

D. Respiration-aware 2D-3D registration and fusion

This task addresses the challenging problem of 2D-3D registration and fusion under respiration, which is essential for the intraoperative guidance of many surgical procedures for liver and kidney cancer treatment. We shall develop a novel 2D-3D registration framework, which consists of spatio-temporal statistical respiration modeling and a novel network for 2D-3D registration.

Spatio-temporal statistical respiration modeling: Considering that the motion state of the 3D anatomy is similar in each respiration cycle, we propose a statistical respiratory motion model based on periodic spatio-temporal distribution, where the 3D anatomy shapes at all corresponding times in all respiration cycles are correlated.
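
The idea that shapes at corresponding times across cycles are correlated can be illustrated with a deliberately simplified sketch: shapes are grouped by respiratory phase, averaged per phase across cycles, and a shape at an arbitrary phase is obtained by periodic interpolation. This is an assumption-laden stand-in for the proposed statistical model, not its definition. The phase binning, the use of a per-phase mean, the linear interpolation, and all names are hypothetical.

```python
from typing import List, Sequence

Shape = List[float]  # flattened (x, y, z) coordinates of the anatomy's vertices


def phase_means(cycles: Sequence[Sequence[Shape]]) -> List[Shape]:
    """Average the shapes observed at the same phase bin over all cycles.

    cycles[c][p] is the shape observed in respiration cycle c at phase bin p.
    """
    n_phases = len(cycles[0])
    if any(len(cycle) != n_phases for cycle in cycles):
        raise ValueError("every cycle must be sampled at the same phase bins")
    means = []
    for p in range(n_phases):
        shapes = [cycle[p] for cycle in cycles]
        # Coordinate-wise mean over cycles.
        means.append([sum(values) / len(shapes) for values in zip(*shapes)])
    return means


def shape_at(means: Sequence[Shape], phase: float) -> Shape:
    """Interpolate the mean shape at a phase given as a fraction of the cycle.

    The phase wraps around, so 1.25 is treated the same as 0.25.
    """
    n = len(means)
    x = (phase % 1.0) * n
    i = int(x) % n    # phase bin at or before the requested phase
    j = (i + 1) % n   # next bin, wrapping to the start of the cycle
    w = x - int(x)    # interpolation weight toward bin j
    return [(1.0 - w) * a + w * b for a, b in zip(means[i], means[j])]


if __name__ == "__main__":
    # Two toy cycles, four phase bins, one vertex coordinate per shape.
    cycles = [
        [[0.0], [2.0], [4.0], [2.0]],
        [[0.0], [4.0], [8.0], [4.0]],
    ]
    model = phase_means(cycles)
    print(shape_at(model, 0.125))
```

A fuller statistical model would also capture the variation around each phase mean, for example with per-phase principal modes, so that an observed 2D image can select a plausible 3D shape rather than only the average one.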

2D-3D registration: We propose to develop a changeable 2D-3D registration network model, namely IGAT (Image-to-graph Attention Network), to achieve accurate 2D-3D registration.

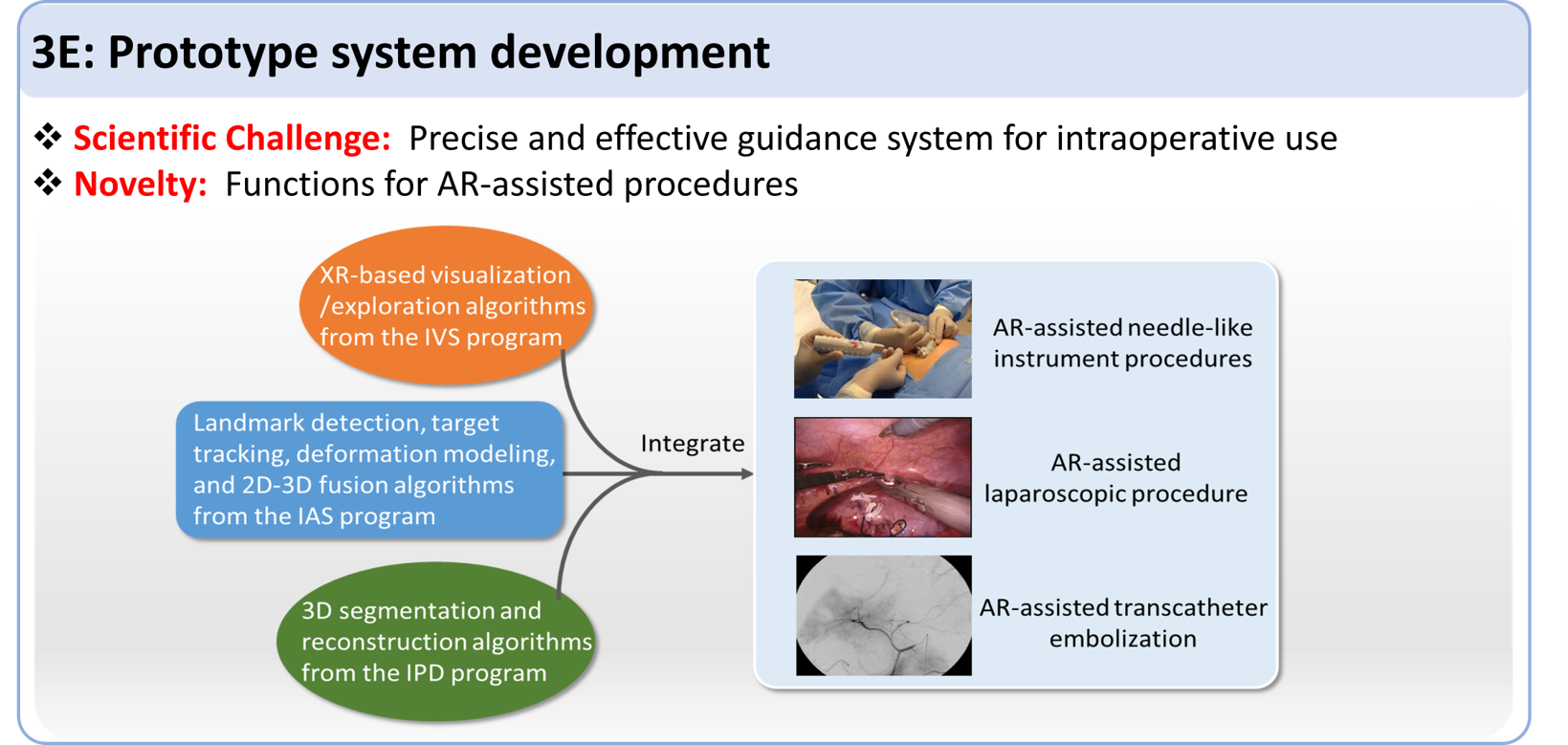

We will develop three prototypes by integrating the above-mentioned algorithms with those developed in the IPD and IVS programs:

(1) AR-assisted needle-like instrument procedures.

(2) AR-assisted laparoscopic procedures.

(3) AR-assisted transcatheter embolization.