In the IVS Program, we aim to build an extended-reality (XR) platform for direct 3D examination, exploration, and understanding of 3D medical data, supported by AI-enabled visualizations and user interactions. Importantly, the platform is intended to be a “natural user interface” in an XR environment, offering intuitive controls for its users. Going beyond existing approaches, we will tightly integrate AI and XR to maximize the usability of the platform and to enrich the clinical workflow. To build this platform, we will leverage components from the IPD and IAS programs, such as medical data segmentation, landmark detection, and tracking. The XR platform will also serve as the backbone for some of the deliverables in the IAS program, as well as for the integrated multi-faceted pipeline system.

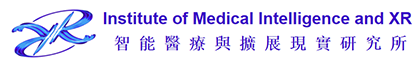

Data visualization systems help people perform tasks more effectively by providing visual data representations. Our goal is to construct an XR-based system that allows doctors and patients to understand and explore 3D medical data in the clinical workflow. This system will include three modules:

(1) 3D Reconstruction Module: This module aims to reconstruct the 3D geometry and associated internal structures of specific body parts.

(2) Data Visualization Module: This module presents the medical data and reconstructed 3D structures in a generic XR environment.

(3) Data Exploration Module: This module provides essential interaction controls for exploring and manipulating medical data, such as 3D transformations, view controls, data annotations, and data filtering.
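As an illustration of the 3D transformations mentioned for the Data Exploration Module, the following is a minimal sketch of how a rotation and a translation could be combined and applied to a point of a reconstructed model using 4x4 homogeneous matrices. It is not the platform's implementation; the function names (`rotation_z`, `translation`, `compose`, `transform_point`) are hypothetical and chosen only for this example.

```python
import math

def rotation_z(angle_rad):
    """4x4 homogeneous matrix for a rotation about the z-axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

def translation(tx, ty, tz):
    """4x4 homogeneous matrix for a translation by (tx, ty, tz)."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def compose(a, b):
    """Matrix product a * b; the result applies b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform_point(m, point):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    v = (point[0], point[1], point[2], 1.0)
    out = [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]
    return (out[0], out[1], out[2])

# Rotate a point 90 degrees about z, then lift it 5 units along z.
model = compose(translation(0.0, 0.0, 5.0), rotation_z(math.pi / 2))
print(transform_point(model, (1.0, 0.0, 0.0)))
```

Here the point (1, 0, 0) ends up at approximately (0, 1, 5). Composing the transforms into one matrix means the same matrix can be applied to every vertex of a model in a single pass.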

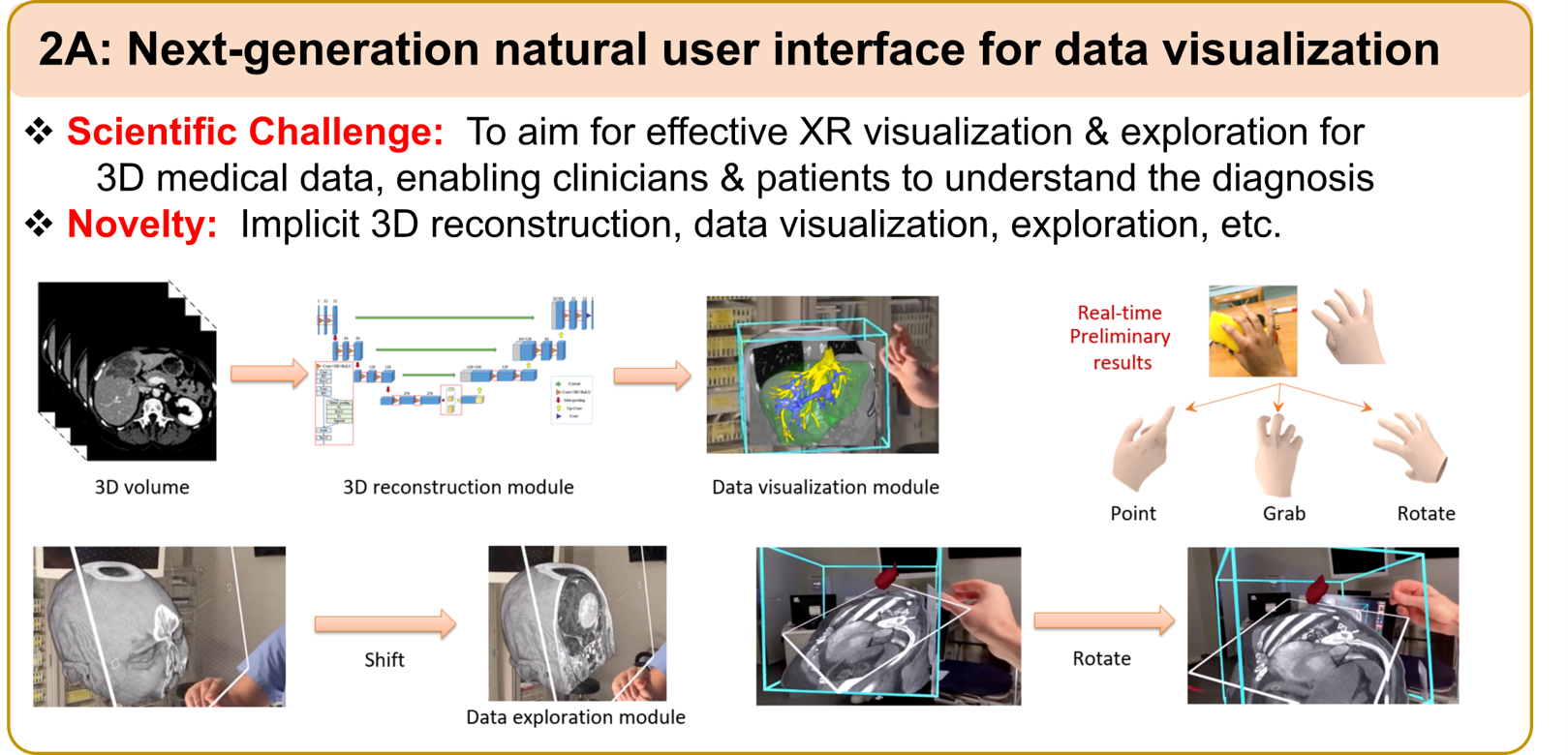

Sub-project B focuses on designing generic user-centric tools for meeting the practical needs in various stages of the clinical workflow, e.g., diagnosis and surgery preplanning.

(1) We will explore coordinated-view visualization to develop a tool that links 2D and 3D views for 2D-3D visual analytics.

(2) We will explore comparative visualization and design AI-enabled comparative visualization techniques to aid efficient exploration of changes in the medical data, such as those following subsequent treatments.

(3) We will develop an XR-based integrated surgical simulation module for preoperative surgical planning.

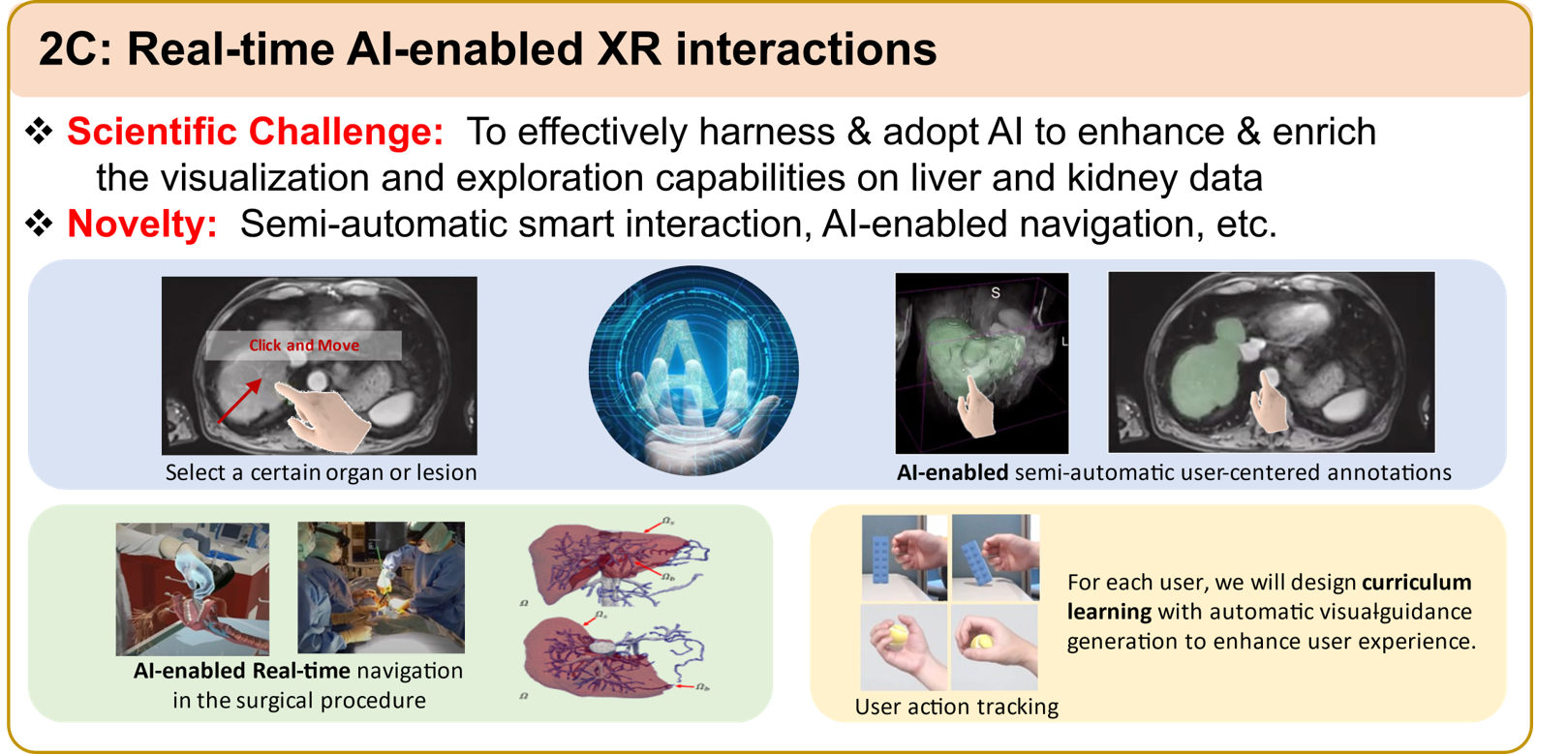

Sub-project C focuses on designing novel AI methods to enhance and enrich user interactions. The development of AI-enabled interactions is an emerging research direction in the field of human-computer interaction. Typical areas that we plan to explore include:

(1) To aid data exploration, e.g., semi-automatic user-centered annotations of anatomical structures; structure-aware interactive estimation of distance, area, and volume; and salient feature detection to offer visual guidance during user exploration.

(2) To aid surgical planning, e.g., biomechanics-based soft tissue deformation; simulation of blood vessel and tissue interactions with surgical needles; and real-time navigation in surgical procedures.

(3) To aid surgical training, e.g., user-action tracking and assessment in surgical training; curriculum learning with automatic visual-guidance generation to enhance user experience.
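To make the distance and volume estimation in item (1) above concrete, the following is a minimal sketch of the underlying measurement arithmetic only. It assumes that a binary segmentation mask (a nested list indexed along three axes) and the physical voxel spacing in millimetres along the same axes are already available. The structure-aware and AI-enabled aspects are not modelled, and the function names are hypothetical.

```python
import math

def mask_volume_mm3(mask, spacing):
    """Volume of a binary voxel mask: voxel count times single-voxel volume."""
    s0, s1, s2 = spacing
    voxel_count = sum(1 for plane in mask for row in plane for v in row if v)
    return voxel_count * s0 * s1 * s2

def landmark_distance_mm(p, q, spacing):
    """Euclidean distance between two voxel indices, scaled to millimetres."""
    return math.sqrt(sum(((a - b) * s) ** 2 for a, b, s in zip(p, q, spacing)))

# Hypothetical 2x2x2 mask with one empty voxel; spacing is an assumed value.
mask = [[[1, 1], [1, 1]],
        [[1, 1], [1, 0]]]
spacing = (1.0, 0.5, 2.0)  # mm per voxel along each index axis

print(mask_volume_mm3(mask, spacing))
print(landmark_distance_mm((0, 0, 0), (1, 1, 1), spacing))
```

Scaling by the voxel spacing matters because medical volumes are often anisotropic, so distances measured in voxel indices alone would be misleading.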

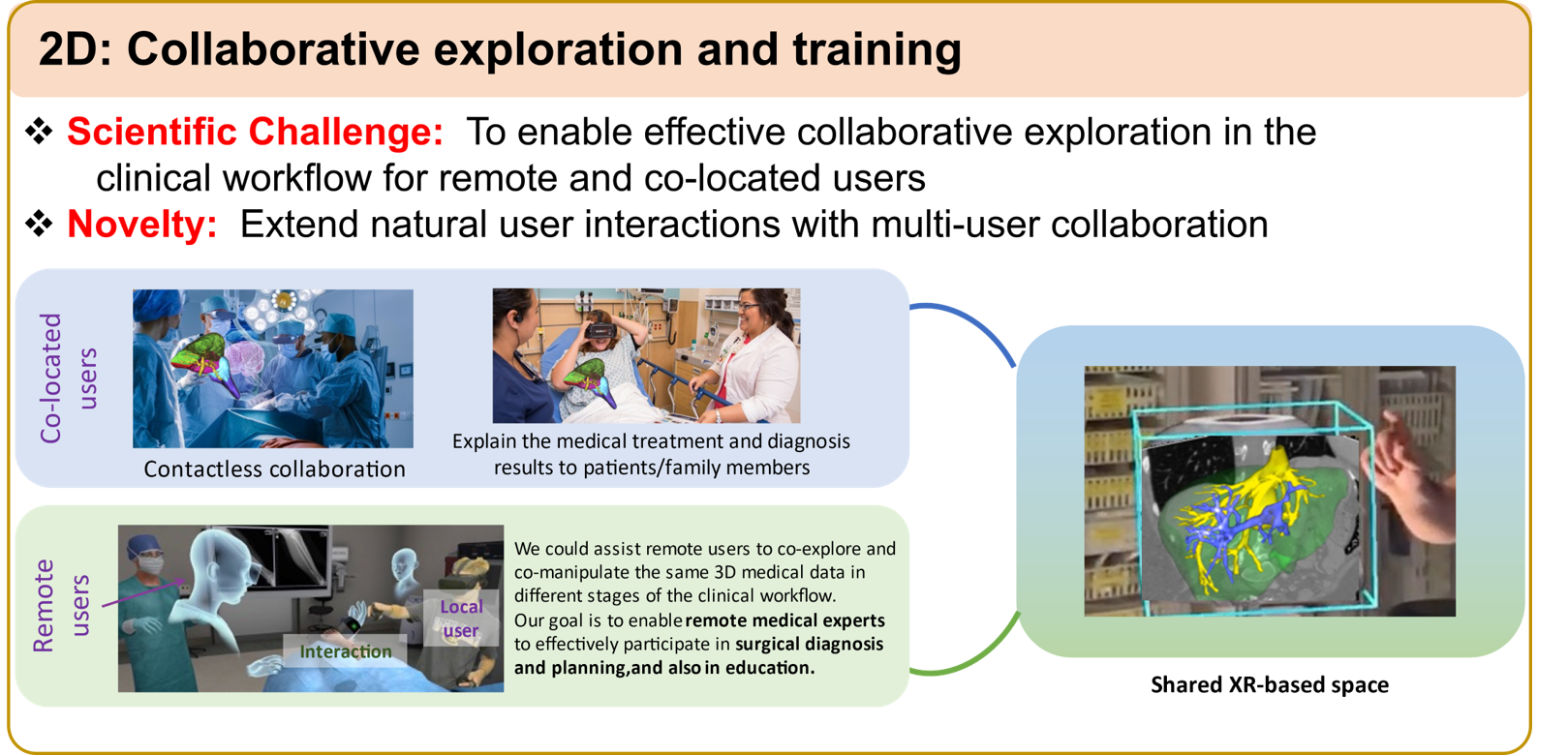

In Sub-project D, we aim to extend our environment to facilitate natural user interactions and collaboration among multiple users. Doing so will maximize the capabilities of our XR platform, since XR naturally offers a common 3D visualization space for seamless collaboration. We will consider two groups of users:

(1) Co-located users, for whom our platform will offer contactless collaboration; here, we will explore how to enable efficient discussion of surgical plans, facilitate training and education, and explain medical treatment and diagnosis results to patients.

(2) Remote users, for whom we will explore tele-medicine, so that remote users can co-explore and co-manipulate the same 3D medical data at different stages of the clinical workflow. Our goal is to enable overseas medical experts to participate effectively in diagnosis and surgical planning, as well as in education.